Email:

liuyangzhuan@lbl.gov

Address:

MS 50A-3111, 1 Cyclotron Road

Berkeley, CA 94720

Phone:

734-546-7392

Google Scholar:

[Here]

Numerical Linear Algebra and Direct Solvers

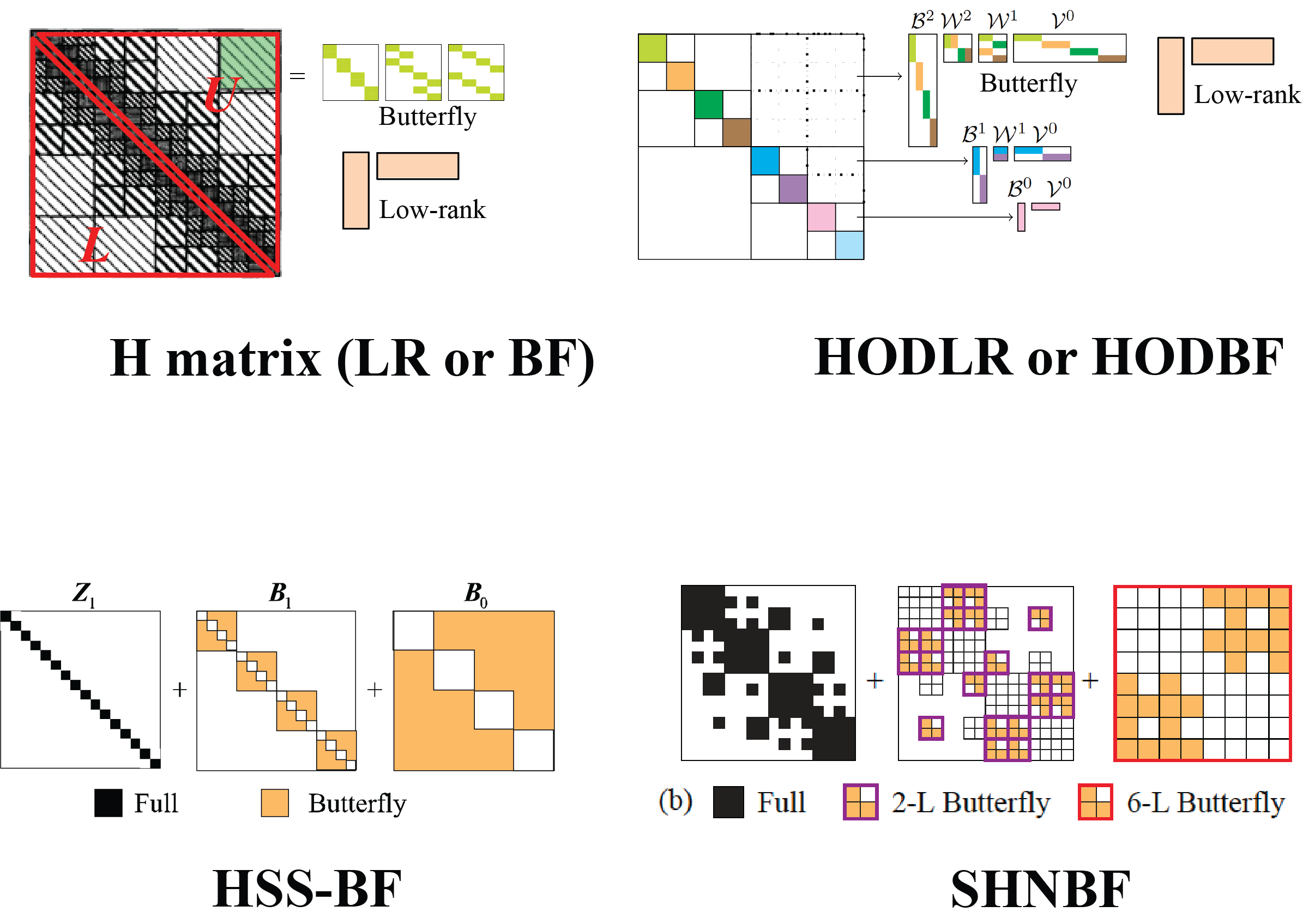

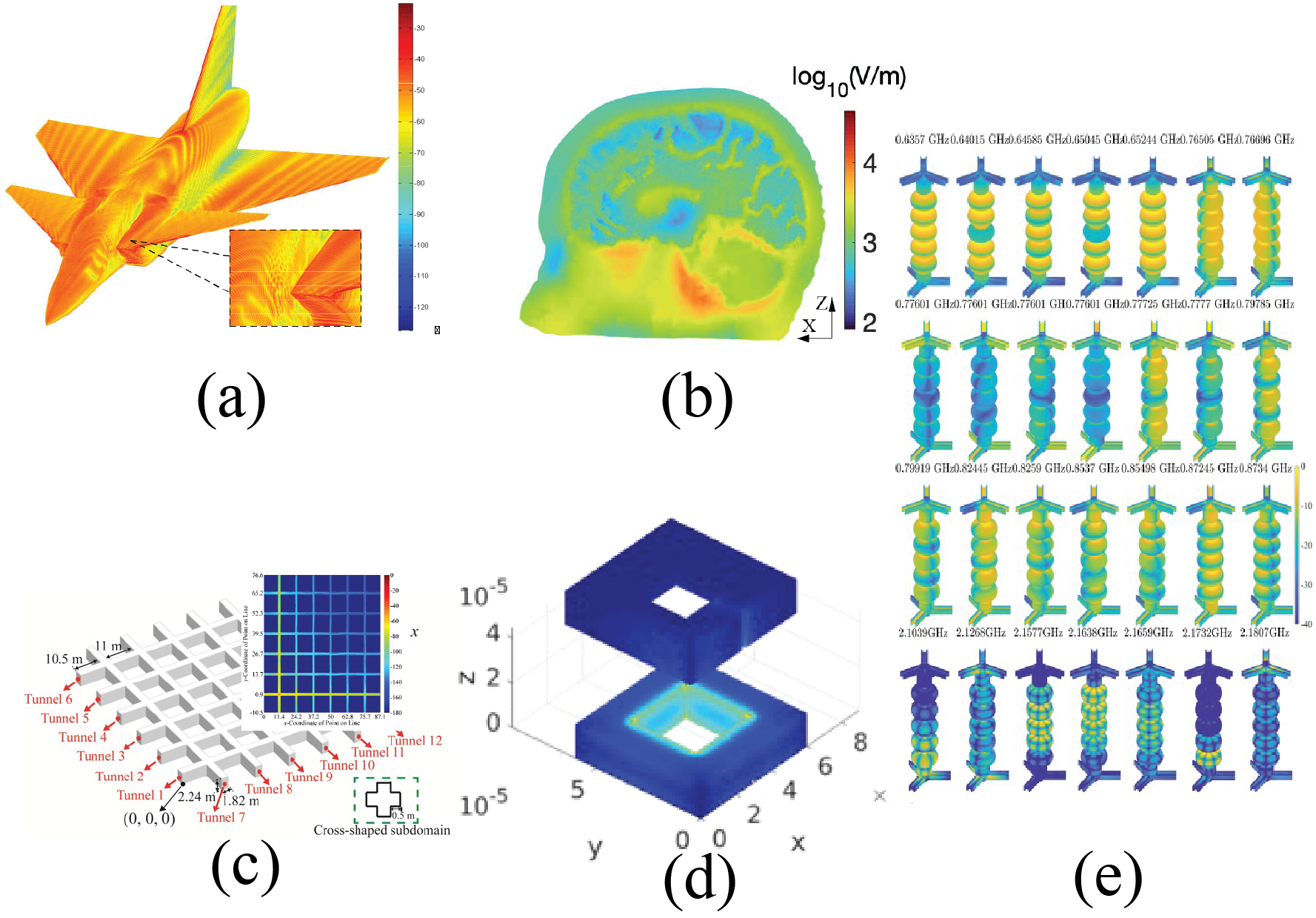

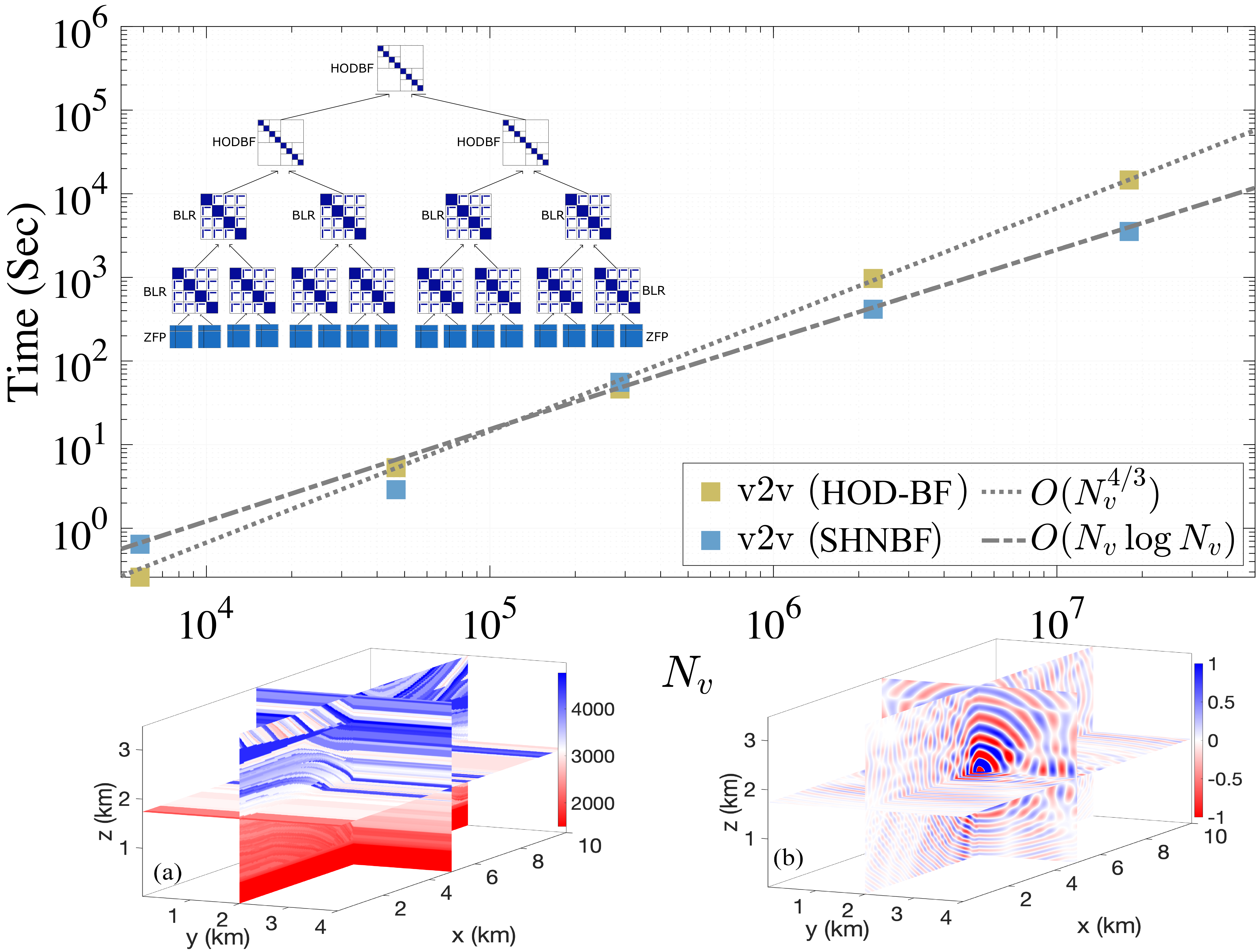

- Randomized butterfly factorization-based direct solution of frequency-domain integral equations (IE) and differential equations (DE). Direct IE solvers for high-frequency Helmholtz problems are attractive when the system matrix is ill-conditioned (e.g., due to low-frequency or dense-mesh breakdown, or near-resonance phenomena) or when the system involves many right-hand sides; in these cases iterative methods fail to provide efficient solutions. However, developing a low-complexity direct solver for high-frequency problems is challenging: past direct solvers relied on low-rank factorizations, which for such problems eventually lead to O(N^3) computational complexity. This work develops butterfly factorization-based direct solvers that construct butterfly-compressed H-matrices, HODLR, and HSS matrices and compute the matrix inverse via randomized butterfly schemes. These solvers exhibit quasi-optimal CPU and memory complexities when applied to large, complex-shaped objects. They have also been combined with the multifrontal sparse direct solver STRUMPACK to solve high-frequency problems arising from DE formulations.
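To give a feel for why rank-structured formats enable fast direct solvers, here is a minimal NumPy sketch of a single HODLR-style level: the two off-diagonal blocks are replaced by truncated-SVD factors, and the Woodbury identity reduces the solve to two half-size diagonal solves plus one small dense system. The helper names (`lowrank`, `hodlr1_solve`), the SVD truncation, and the smooth Gaussian test kernel are assumptions made for illustration. This is not ButterflyPACK code, and it uses plain low-rank compression, whereas the high-frequency problems above need butterfly compression.

```python
import numpy as np

def lowrank(B, tol=1e-10):
    """Truncated SVD: return (L, R) with B ≈ L @ R, dropping singular values below tol * s_max."""
    U, s, Vt = np.linalg.svd(B, full_matrices=False)
    k = max(1, int(np.sum(s > tol * s[0])))
    return U[:, :k] * s[:k], Vt[:k, :]

def hodlr1_solve(A, b, tol=1e-10):
    """One-level HODLR-style solve of A x = b (hypothetical helper, illustration only).

    A is split as D + U @ V, where D = blkdiag(A11, A22) and the off-diagonal
    blocks are replaced by low-rank factors A12 ≈ U1 @ V2, A21 ≈ U2 @ V1.
    The Woodbury identity then needs only two half-size diagonal solves
    plus one small (k1 + k2) x (k1 + k2) dense solve.
    """
    n = A.shape[0]
    m = n // 2
    A11, A12, A21, A22 = A[:m, :m], A[:m, m:], A[m:, :m], A[m:, m:]
    U1, V2 = lowrank(A12, tol)
    U2, V1 = lowrank(A21, tol)
    k1, k2 = U1.shape[1], U2.shape[1]

    # Assemble the global low-rank correction U @ V = [[0, U1 V2], [U2 V1, 0]].
    U = np.zeros((n, k1 + k2))
    U[:m, :k1], U[m:, k1:] = U1, U2
    V = np.zeros((k1 + k2, n))
    V[:k1, m:], V[k1:, :m] = V2, V1

    def dsolve(R):
        # Apply D^{-1} block by block (works for vectors and matrices).
        return np.concatenate([np.linalg.solve(A11, R[:m]), np.linalg.solve(A22, R[m:])])

    Db, DU = dsolve(b), dsolve(U)
    # Woodbury: (D + U V)^{-1} b = D^{-1} b - D^{-1} U (I + V D^{-1} U)^{-1} V D^{-1} b
    S = np.eye(k1 + k2) + V @ DU
    return Db - DU @ np.linalg.solve(S, V @ Db)

# Usage: a smooth kernel matrix (numerically low-rank off-diagonal blocks) plus a diagonal shift.
rng = np.random.default_rng(0)
pts = np.sort(rng.random(200))
A = np.exp(-(pts[:, None] - pts[None, :]) ** 2) + 200.0 * np.eye(200)
b = rng.standard_normal(200)
x = hodlr1_solve(A, b)
print(np.linalg.norm(A @ x - b) / np.linalg.norm(b))   # small relative residual
```

Applying the same split recursively to A11 and A22 gives a full HODLR solver. For oscillatory high-frequency Helmholtz kernels the off-diagonal ranks grow with the electrical size, which is what motivates replacing the low-rank factors with butterflies.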

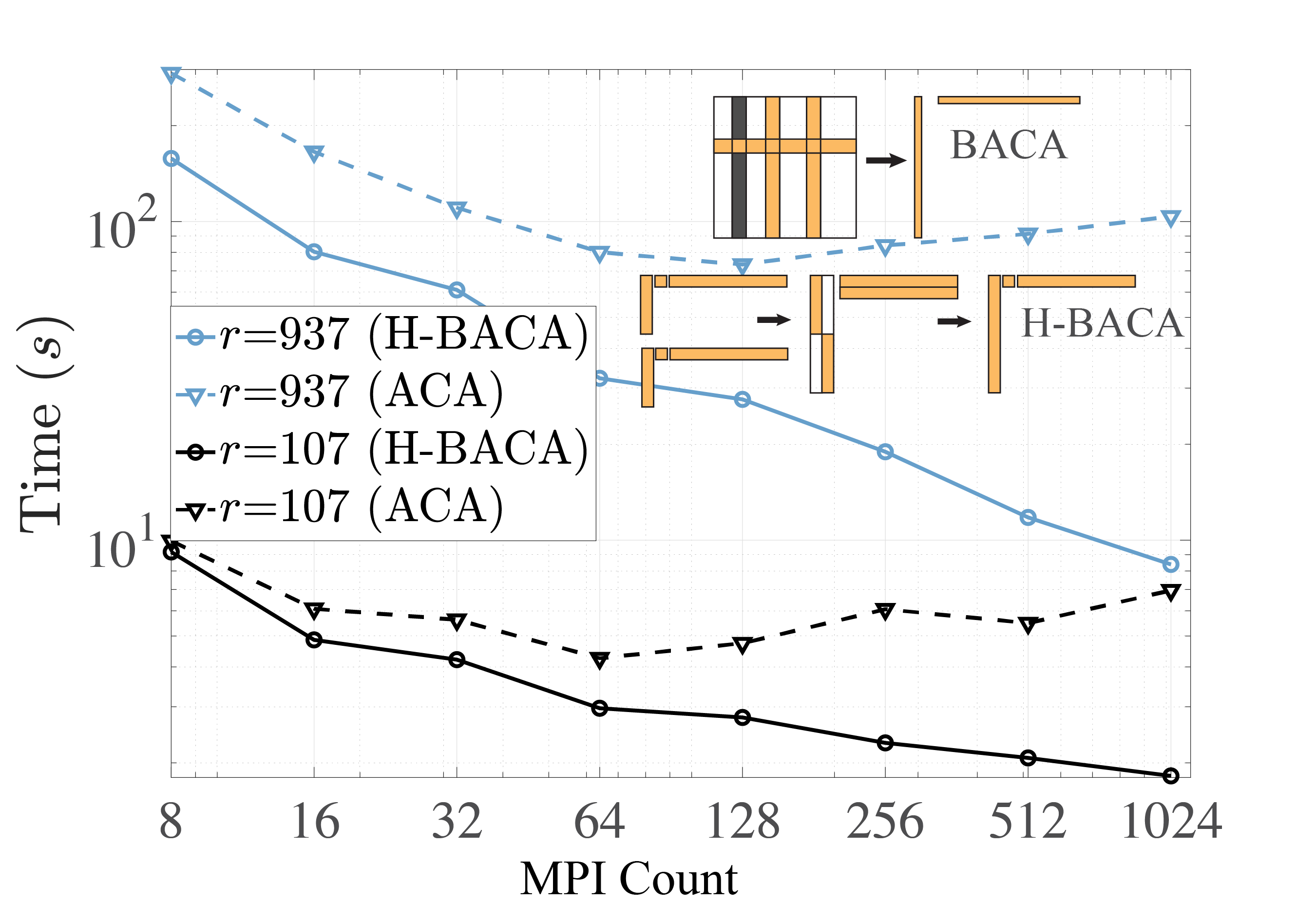

- Low-rank and tensor algorithms for rank-structured linear systems in PDEs. Beyond Helmholtz equations, many scientific and engineering applications require solving or applying large linear or multilinear operators that exhibit hierarchical rank structure. Such systems can be solved in quasi-linear time using hierarchical matrices together with randomized low-rank and tensor techniques. I developed fast algorithms, including hierarchical blocked adaptive cross approximation (H-BACA), sketching-based hierarchical matrix construction, and new tensor algorithms for high-dimensional problems, to rapidly construct, factor, or solve these computationally demanding operators. These algorithms have been applied to accelerate wave-equation solvers, kernel ridge regression, reaction-diffusion equations, fractional Laplacians, incompressible Navier-Stokes equations, and quantum chemistry applications.
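As its name suggests, H-BACA extends adaptive cross approximation (ACA), which builds a low-rank factorization of a kernel block from a few sampled rows and columns without forming the block. The sketch below is the classical, sequential ACA with partial pivoting, not the blocked, hierarchical H-BACA algorithm; `aca_partial`, its stopping rule, and the 1/|x - y| test kernel are illustrative assumptions.

```python
import numpy as np

def aca_partial(get_row, get_col, m, n, tol=1e-8, max_rank=None):
    """Classical ACA with partial pivoting (hypothetical helper, illustration only).

    Returns (U, V) with M ≈ U @ V for an m x n block M that is accessed only
    through get_row(i) -> M[i, :] and get_col(j) -> M[:, j].
    Stops when the newest rank-1 term is small relative to the running
    Frobenius-norm estimate of the approximation.
    """
    max_rank = min(m, n) if max_rank is None else max_rank
    us, vs = [], []
    used_rows = set()
    i, frob2 = 0, 0.0
    for _ in range(max_rank):
        used_rows.add(i)
        # Residual of row i: original row minus the current approximation.
        row = np.array(get_row(i), dtype=float)
        for u, v in zip(us, vs):
            row -= u[i] * v
        j = int(np.argmax(np.abs(row)))
        if abs(row[j]) < 1e-14:
            # Row already reproduced; try a fresh row or stop.
            fresh = [r for r in range(m) if r not in used_rows]
            if not fresh:
                break
            i = fresh[0]
            continue
        v = row / row[j]
        # Residual of column j.
        u = np.array(get_col(j), dtype=float)
        for uu, vv in zip(us, vs):
            u -= vv[j] * uu
        # Update ||U V||_F^2 with the new rank-1 term u v^T.
        for uu, vv in zip(us, vs):
            frob2 += 2.0 * (u @ uu) * (v @ vv)
        frob2 += (u @ u) * (v @ v)
        us.append(u)
        vs.append(v)
        if np.linalg.norm(u) * np.linalg.norm(v) <= tol * np.sqrt(frob2):
            break
        # Next row pivot: largest entry of the new column among unused rows.
        score = np.abs(u)
        score[list(used_rows)] = -1.0
        i = int(np.argmax(score))
    if not us:
        return np.zeros((m, 0)), np.zeros((0, n))
    return np.column_stack(us), np.vstack(vs)

# Example: 1/|x - y| interactions between two well-separated point clusters.
x = np.linspace(0.0, 1.0, 300)
y = np.linspace(3.0, 4.0, 250)
kernel = lambda i, j: 1.0 / np.abs(x[i] - y[j])
U, V = aca_partial(lambda i: kernel(i, np.arange(250)),
                   lambda j: kernel(np.arange(300), j), 300, 250)
M = 1.0 / np.abs(x[:, None] - y[None, :])   # formed here only to check the error
```

Only the rows and columns that ACA visits are evaluated, so the number of kernel evaluations scales with the rank times (m + n) rather than m × n.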

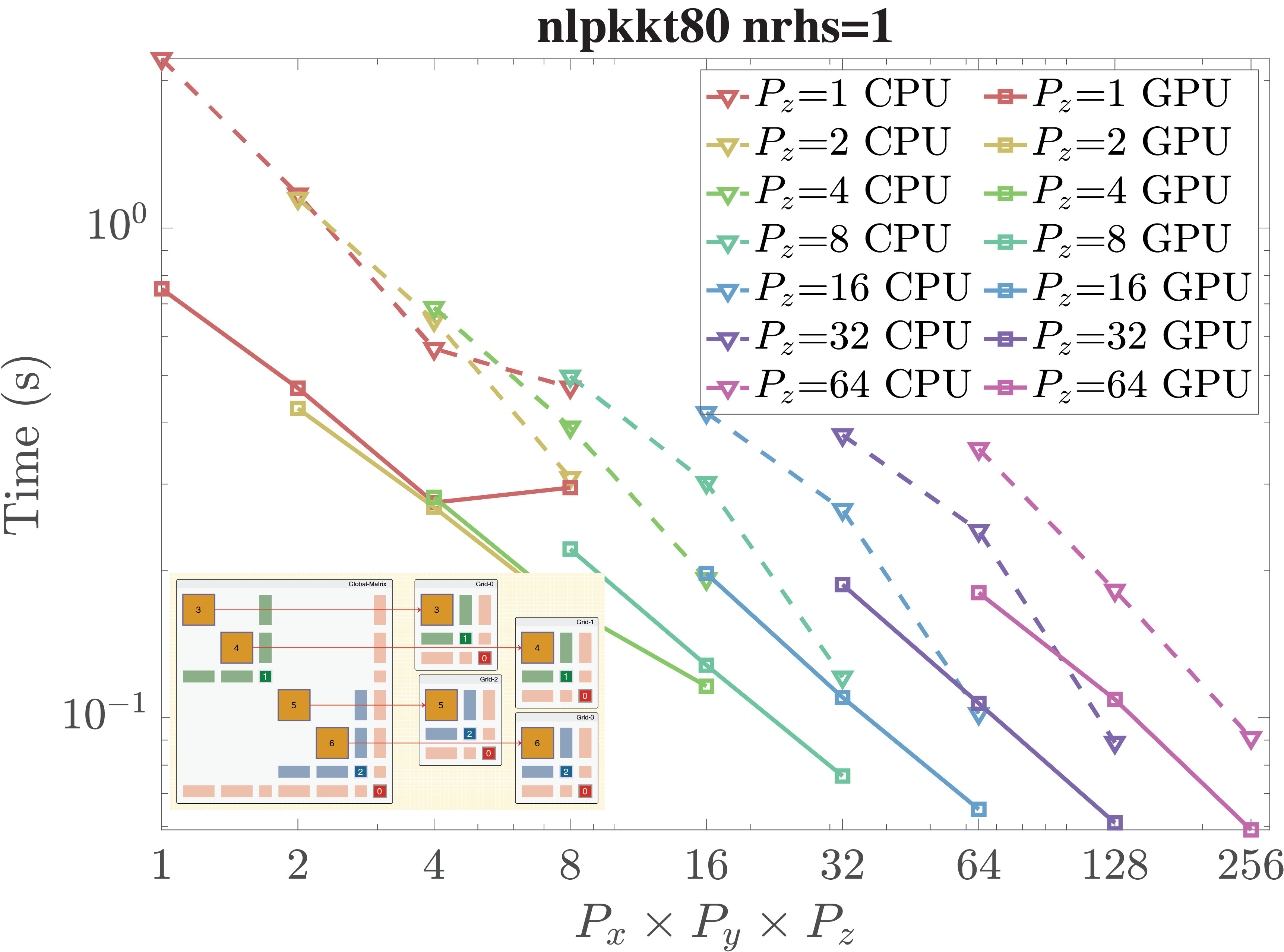

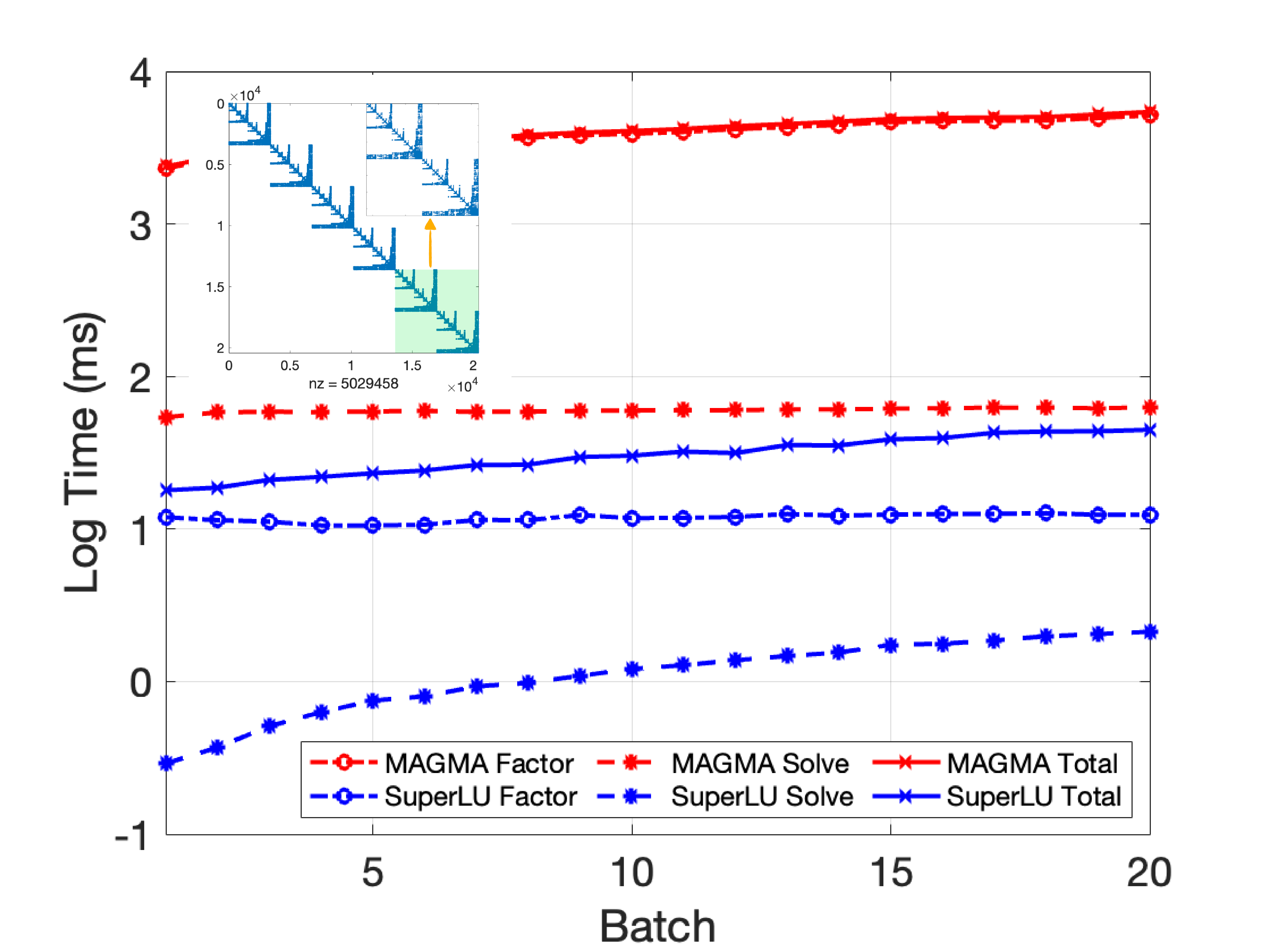

- Highly scalable supernodal sparse direct solvers. Supernodal sparse direct solvers, such as SuperLU_DIST, are widely used in scientific and engineering applications. Typical use patterns include single and repeated factorizations/solves, block preconditioners in Krylov methods, and batched factorizations/solves on one or many CPU/GPU nodes. I developed advanced communication-avoiding and communication-optimized factorization and solve algorithms for large-scale CPU and GPU clusters. These algorithms have demonstrated superior parallel scalability on CPU and GPU machines in applications such as fusion simulation, power grid optimization, and nuclear physics, and they have been integrated into SuperLU_DIST.
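SciPy's `scipy.sparse.linalg.splu` wraps the sequential SuperLU library (not SuperLU_DIST), so it offers a quick way to illustrate the factor-once, solve-many pattern mentioned above on a single node. The 2-D Laplacian test matrix and the number of right-hand sides are arbitrary choices for this sketch.

```python
import numpy as np
import scipy.sparse as sp
from scipy.sparse.linalg import splu

def laplacian_2d(k):
    """5-point finite-difference Laplacian on a k x k grid, in CSC format."""
    T = sp.diags([-1.0, 2.0, -1.0], [-1, 0, 1], shape=(k, k))
    I = sp.identity(k)
    return (sp.kron(I, T) + sp.kron(T, I)).tocsc()

A = laplacian_2d(40)                     # 1600 x 1600 sparse matrix
lu = splu(A, permc_spec="COLAMD")        # factor once: fill-reducing column ordering + supernodal LU
rng = np.random.default_rng(0)
B = rng.standard_normal((A.shape[0], 8))
X = lu.solve(B)                          # reuse the factors for 8 right-hand sides at once

# Repeated solves with new right-hand sides (e.g. inside a time loop) also reuse `lu`.
for _ in range(3):
    b = rng.standard_normal(A.shape[0])
    x = lu.solve(b)
```

Distributed-memory and GPU runs go through SuperLU_DIST's own interfaces, which this sketch does not attempt to reproduce.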

Algorithm developments for direct solvers: (top left) butterfly and low-rank direct solvers in ButterflyPACK, (top middle) H-BACA algorithm, (top right) applications of the IE solvers in ButterflyPACK, (bottom left) seismic modeling with ButterflyPACK and STRUMPACK, (bottom middle) strong scaling of the sparse CPU/GPU triangular solve in SuperLU_DIST, (bottom right) time scaling with batch count for the SuperLU_DIST batched factorization and solve algorithms.

Uncertainty Quantification and Machine Learning

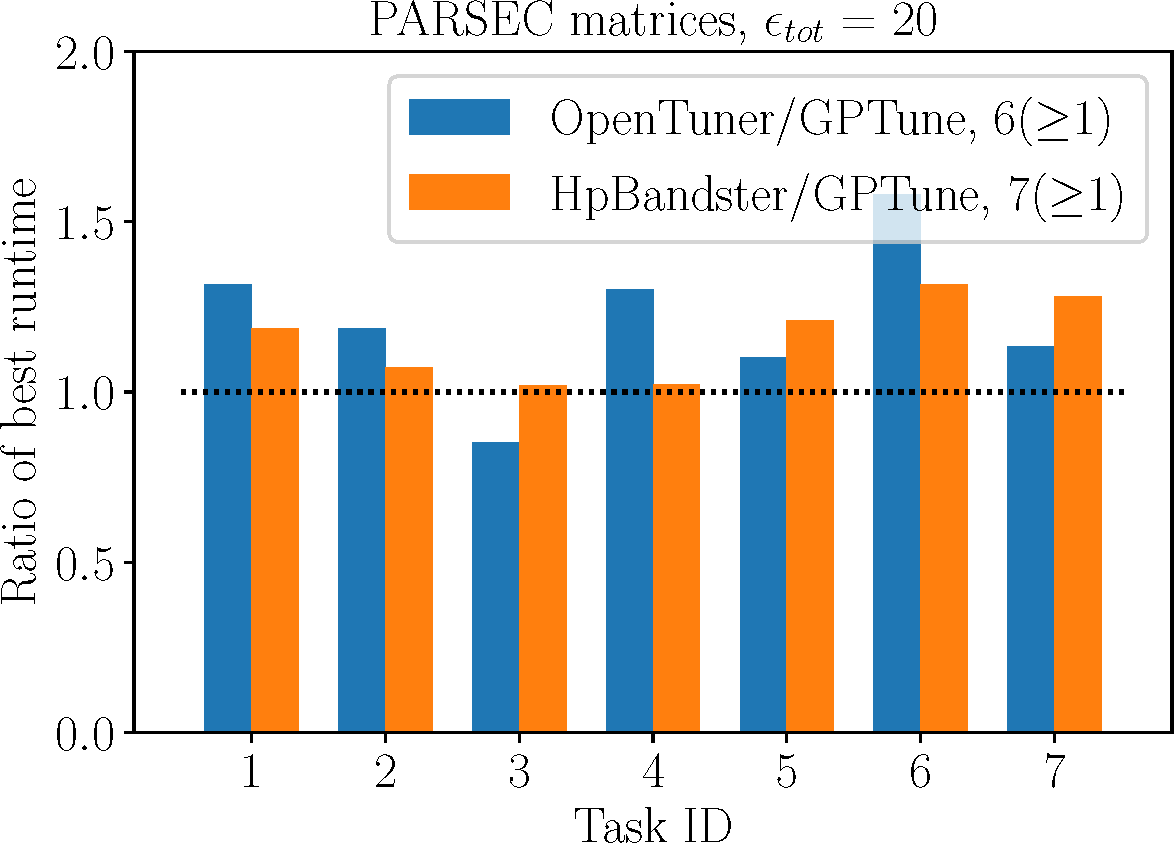

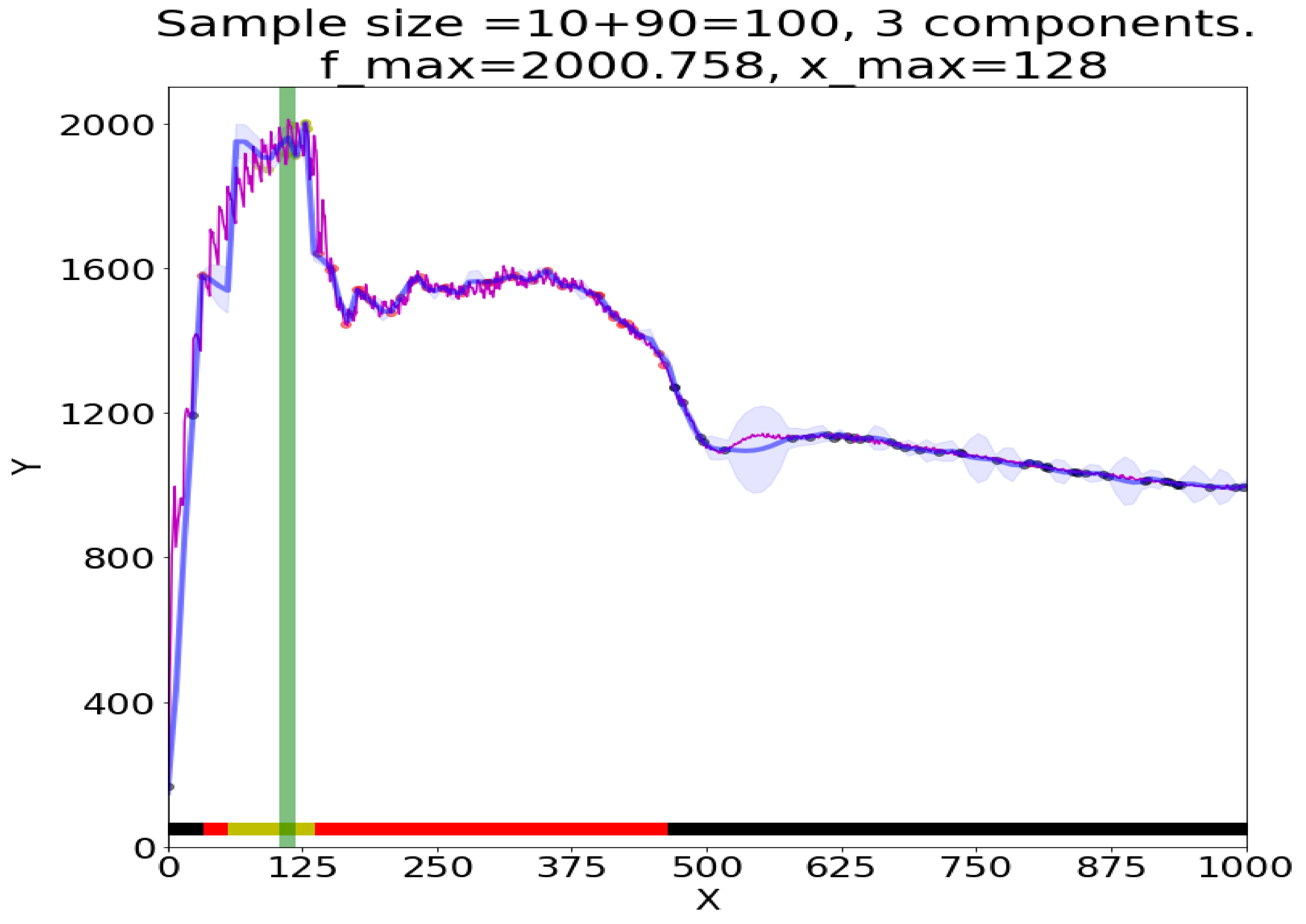

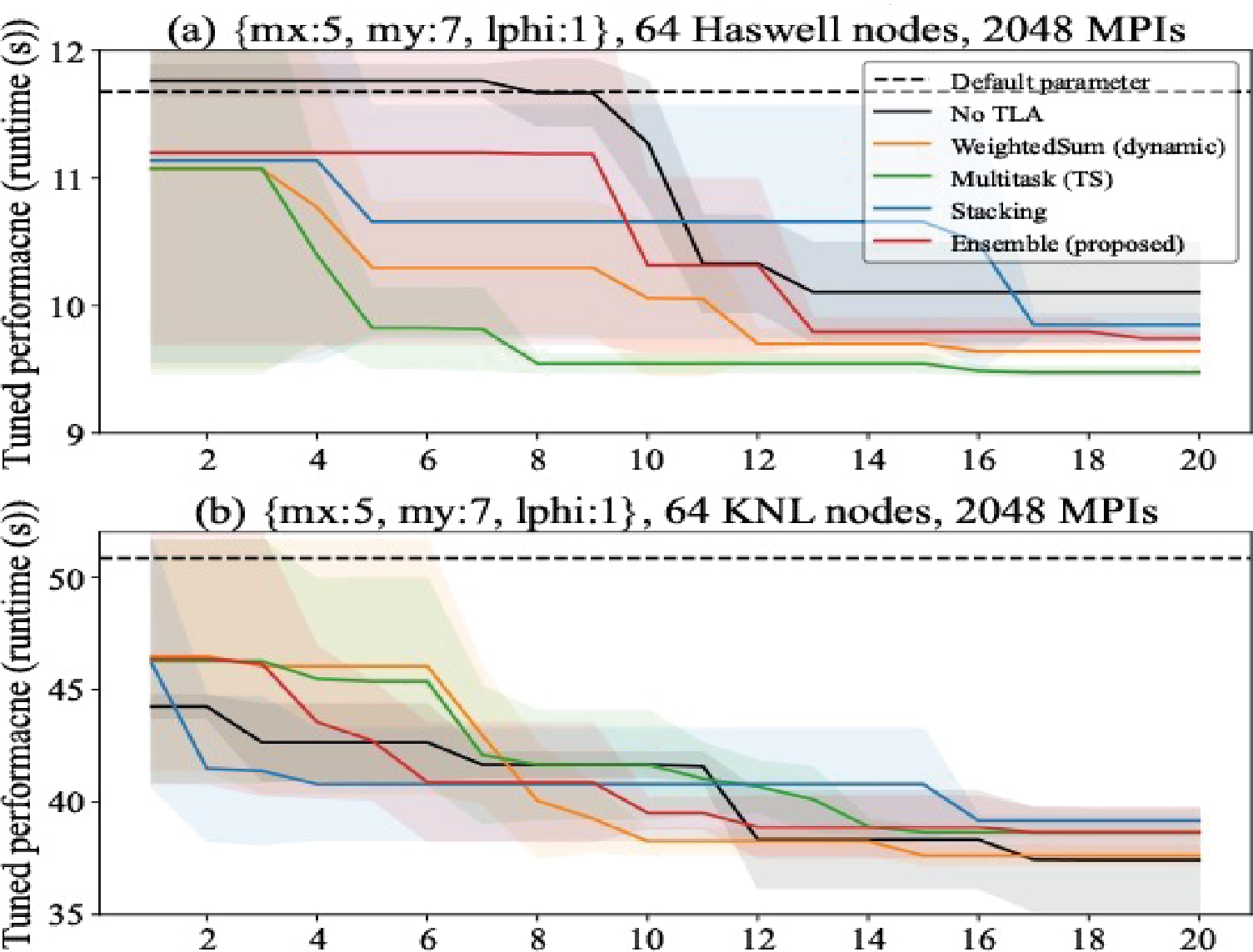

- Gaussian process (GP)-based autotuning framework for exascale and machine learning applications. The performance (e.g., runtime, memory, communication, accuracy) of high-performance computing (HPC) applications can vary significantly with a number of tuning parameters of real, integer, and categorical types, yet a single evaluation can require a long execution time on many compute nodes. GP frameworks are well suited to autotuning HPC applications because they need relatively few function evaluations to build a surrogate model of the application's performance. Our work extends traditional GP frameworks with multitask and transfer learning algorithms based on the linear coregionalization model (LCM). The resulting GPTune tool and its variants outperform existing single-task tuners for many exascale applications, such as SuperLU_DIST, Hypre, ScaLAPACK, NIMROD, M3DC1, STRUMPACK, and MFEM. Notable advanced features: GPTune can leverage shared- and distributed-memory parallelism to accelerate surrogate modeling; use historical and cloud databases to incorporate more data; combine with multi-armed bandit strategies (GPTuneBand) for multi-fidelity tuning; use clustered GP algorithms (cGP) to better model non-smooth functions; and support multi-objective tuning and the incorporation of coarse performance models.
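The core loop of GP-based autotuning can be sketched in a few lines of NumPy: fit a GP surrogate to the runs measured so far, choose the next configuration by minimizing an acquisition function, run it, and repeat. This is a single-task, one-parameter sketch under stated assumptions (fixed RBF hyperparameters, a lower-confidence-bound acquisition, a grid of candidates, and a hypothetical `runtime` function standing in for an HPC application); it does not implement GPTune's multitask LCM model or its API.

```python
import numpy as np

def rbf(a, b, ell=0.15):
    """Squared-exponential kernel (unit signal variance) between 1-D point sets."""
    return np.exp(-0.5 * (a[:, None] - b[None, :]) ** 2 / ell ** 2)

def gp_posterior(X, y, Xs, noise=1e-6):
    """GP regression posterior mean and std at Xs (constant prior mean = sample mean of y)."""
    mu = y.mean()
    L = np.linalg.cholesky(rbf(X, X) + noise * np.eye(len(X)))
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y - mu))
    Ks = rbf(X, Xs)
    mean = mu + Ks.T @ alpha
    v = np.linalg.solve(L, Ks)
    var = np.clip(1.0 - np.sum(v ** 2, axis=0), 1e-12, None)
    return mean, np.sqrt(var)

def runtime(t):
    """Hypothetical stand-in for an expensive application run; t in [0, 1] is one tuning parameter."""
    return (t - 0.63) ** 2 + 0.1 * np.sin(12.0 * t) + 1.0

def tune(f, n_init=3, n_iter=10, kappa=2.0, seed=0):
    """Surrogate-based tuning loop: fit GP, minimize a lower confidence bound, evaluate, repeat."""
    rng = np.random.default_rng(seed)
    grid = np.linspace(0.0, 1.0, 501)                 # candidate configurations
    X = rng.choice(grid, size=n_init, replace=False)  # initial random samples
    y = np.array([f(x) for x in X])
    for _ in range(n_iter):
        mean, std = gp_posterior(X, y, grid)
        x_next = grid[np.argmin(mean - kappa * std)]  # optimistic guess for the minimum runtime
        X, y = np.append(X, x_next), np.append(y, f(x_next))
    best = int(np.argmin(y))
    return X[best], y[best], len(y)

best_t, best_time, n_evals = tune(runtime)
```

A multitask model such as LCM would replace the single kernel with a sum of products of input-space kernels and task-covariance matrices, so that runs from related tasks inform each other's surrogates.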

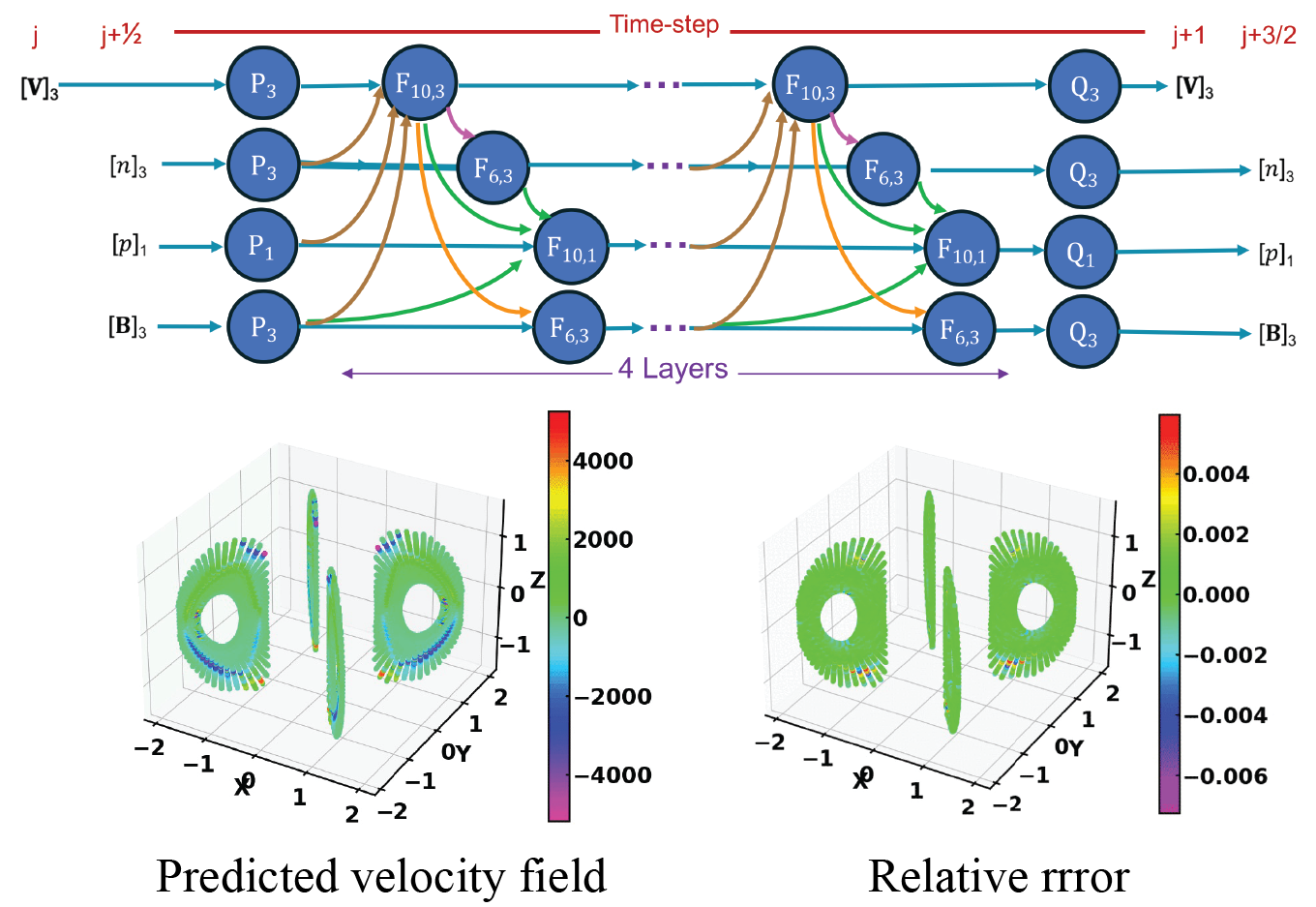

- Domain-aware neural operators for fusion simulations. Fusion simulations typically rely on particle-in-cell, spectral, or finite-element methods based on either Vlasov-type equations or fluid models. These simulation tools are very expensive for whole-device tokamak or stellarator modeling. Our work develops novel neural operators as cheap surrogates for first-principles numerical simulators. Our ML surrogate is based on Fourier neural operators (FNO), which typically require fewer training samples and parameters than other ML tools while maintaining high prediction accuracy. The surrogate further leverages the governing equations of a given fusion simulator to significantly reduce the number of network parameters; we call this approach fusion-FNO. Fusion-FNO can serve as a preconditioner for linear systems in fusion simulators, a cheap diagnostic tool, or a transfer learning tool.

- Statistical characterization of electromagnetic (EM) wave propagation in mine tunnels using the multi-element probabilistic collocation (ME-PC) method. Uncertainty quantification of EM wave propagation in realistic mine environments is very challenging because existing simulators and surrogate modeling tools are inefficient and inaccurate. We use the ME-PC algorithm to build the surrogate and a full-wave IE simulator (FMM-FFT-SIE) to compute the observables. The pertinent statistics can then be extracted via Monte Carlo sampling using the combined ME-PC and FMM-FFT-SIE approach.
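At the heart of the FNO layers behind the fusion surrogate above is a spectral convolution: transform the input field to Fourier space, keep a fixed number of low-frequency modes, mix channels with learned complex weights per mode, and transform back. The NumPy forward pass below is a minimal sketch of that standard layer with random (untrained) weights and a hypothetical band-limited input; it does not include fusion-FNO's use of the governing equations to reduce parameters, nor any training.

```python
import numpy as np

def spectral_conv1d(u, weights, n_modes):
    """Spectral convolution of a standard 1-D FNO layer (single sample, forward pass only).

    u:       (n_grid, c_in) real field sampled on a uniform periodic grid
    weights: (n_modes, c_in, c_out) complex, one channel-mixing matrix per retained mode
    """
    n_grid = u.shape[0]
    u_hat = np.fft.rfft(u, axis=0)                               # (n_grid//2 + 1, c_in)
    out_hat = np.zeros((u_hat.shape[0], weights.shape[2]), dtype=complex)
    # Keep only the lowest n_modes frequencies and mix channels mode by mode.
    out_hat[:n_modes] = np.einsum("ki,kio->ko", u_hat[:n_modes], weights)
    return np.fft.irfft(out_hat, n=n_grid, axis=0)              # (n_grid, c_out)

def fourier_layer(u, weights, W, n_modes):
    """Spectral path + pointwise linear path, followed by a ReLU nonlinearity."""
    return np.maximum(spectral_conv1d(u, weights, n_modes) + u @ W, 0.0)

rng = np.random.default_rng(0)
c_in, c_out, n_modes = 2, 3, 8
weights = (rng.standard_normal((n_modes, c_in, c_out))
           + 1j * rng.standard_normal((n_modes, c_in, c_out)))
W = rng.standard_normal((c_in, c_out))

def sample(n):
    # A band-limited two-channel input field on n uniform grid points in [0, 1).
    x = np.arange(n) / n
    return np.stack([np.sin(2 * np.pi * x), 0.5 * np.cos(4 * np.pi * x)], axis=1)

out_coarse = fourier_layer(sample(64), weights, W, n_modes)
out_fine = fourier_layer(sample(128), weights, W, n_modes)
```

Because `weights` has n_modes × c_in × c_out entries regardless of the grid size, the same layer applies to 64- and 128-point discretizations and, for band-limited inputs, gives matching outputs at shared grid points. This resolution independence is one reason FNOs can stay compact.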
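The ME-PC + Monte Carlo workflow in the mine-tunnel bullet above can be illustrated with a single uniformly distributed random input. In this sketch the random interval is split into equal elements, the model is evaluated at Gauss-Legendre nodes in each element, each element gets its own interpolating polynomial, and Monte Carlo sampling is then run on the cheap surrogate. The analytic `model`, the element count, and the node count are assumptions; the actual study uses the FMM-FFT-SIE solver in place of `model`.

```python
import numpy as np
from numpy.polynomial import Polynomial
from numpy.polynomial.legendre import leggauss

def build_me_pc(model, n_elem=4, n_nodes=5, lo=-1.0, hi=1.0):
    """Multi-element collocation surrogate for a model with one uniform random input.

    [lo, hi] is split into n_elem equal elements; on each, the model is run at
    n_nodes Gauss-Legendre nodes and replaced by the interpolating polynomial.
    """
    edges = np.linspace(lo, hi, n_elem + 1)
    ref_nodes, _ = leggauss(n_nodes)                            # nodes on [-1, 1]
    polys = []
    for a, b in zip(edges[:-1], edges[1:]):
        nodes = 0.5 * (b - a) * ref_nodes + 0.5 * (a + b)        # mapped to [a, b]
        vals = np.array([model(x) for x in nodes])               # the expensive solver runs
        polys.append(Polynomial.fit(nodes, vals, n_nodes - 1))   # degree n-1 -> interpolation

    def surrogate(xi):
        xi = np.asarray(xi, dtype=float)
        elem = np.clip(np.searchsorted(edges, xi, side="right") - 1, 0, n_elem - 1)
        out = np.empty_like(xi)
        for e, p in enumerate(polys):
            mask = elem == e
            out[mask] = p(xi[mask])
        return out
    return surrogate

def model(xi):
    """Hypothetical stand-in for an FMM-FFT-SIE observable, with a kink at xi = 0."""
    return np.exp(-np.abs(xi)) * np.cos(3.0 * xi)

surrogate = build_me_pc(model)                          # 4 elements x 5 nodes = 20 model runs
rng = np.random.default_rng(0)
samples = surrogate(rng.uniform(-1.0, 1.0, 200_000))    # cheap Monte Carlo on the surrogate
mc_mean, mc_std = samples.mean(), samples.std()
```

Placing an element edge at the kink (xi = 0) keeps each local polynomial smooth, which is the main advantage of a multi-element partition over a single global polynomial.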

Autotuning results using GPTune (top left) and its variants GPTuneBand (top middle), cGP (top right), and transfer learning (bottom left). Hybrid Bayesian + downhill simplex optimization for RF accelerator modeling (bottom middle). Fusion-FNO as a cheap surrogate for tokamak modeling.

Time-Domain Solvers for Electromagnetic and Multi-Physics Analysis

- Plane-wave time-domain (PWTD) algorithm-accelerated time-domain integral equation (TDIE) solutions. The PWTD algorithm is the time-domain counterpart of the frequency-domain fast multipole method (FMM) and is well suited to accelerating TDIE solutions of large transient electromagnetic problems. However, because of stability and computational efficiency issues, PWTD-TDIE solvers could not handle problems as large as those solved by frequency-domain solvers. This work develops provably scalable parallelizations of the PWTD algorithm on modern computing architectures and explores wavelet compression to reduce computational costs. When integrated into surface and volume integral equation solvers, the resulting PWTD algorithms enable electromagnetic characterization of real-life objects involving ten million and tens of millions of spatial unknowns, respectively, a significant improvement over past TDIE solvers. See the illustrations below.

Examples of PWTD-TDIE solutions: a PEC sphere (left) and helicopter (middle) using surface IE, and red blood cells (right) using volume IE.
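PWTD accelerates marching-on-in-time (MOT) solvers for TDIEs, which advance the unknown currents one time step at a time. The toy NumPy/SciPy loop below shows the classical MOT recurrence with direct evaluation of the history sum, which is the part PWTD speeds up by representing far-field interactions with plane-wave expansions. Small random matrices plus an identity stand in for actual interaction matrices, and `march_on_in_time` is a hypothetical name; no PWTD acceleration is implemented here.

```python
import numpy as np
from scipy.linalg import lu_factor, lu_solve

def march_on_in_time(Z, V):
    """Classical marching-on-in-time (MOT) solve of a discretized TDIE.

    Z: (K+1, Ns, Ns) interaction matrices Z_0..Z_K
    V: (Nt, Ns) tested incident-field samples
    At each time step n:  Z_0 I_n = V_n - sum_{k=1}^{min(K, n)} Z_k I_{n-k}.
    The history sum on the right is the expensive part that PWTD accelerates.
    """
    K = Z.shape[0] - 1
    Nt, Ns = V.shape
    Z0 = lu_factor(Z[0])               # factor the instantaneous matrix once
    currents = np.zeros((Nt, Ns))
    for n in range(Nt):
        rhs = V[n].copy()
        for k in range(1, min(K, n) + 1):
            rhs -= Z[k] @ currents[n - k]   # fields radiated by past currents
        currents[n] = lu_solve(Z0, rhs)
    return currents

# Toy data: random matrices stand in for actual TDIE interaction matrices.
rng = np.random.default_rng(0)
Ns, Nt, K = 30, 40, 5
Z = 0.005 * rng.standard_normal((K + 1, Ns, Ns))
Z[0] += np.eye(Ns)
V = rng.standard_normal((Nt, Ns))
currents = march_on_in_time(Z, V)
```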